2d image to 3d mapping using color as depth

October 15, 2011

Idea

Recently I had a chance to learn about matrix transformations in 3d space, and I wondered whether it would be possible to convert a 2d digital image into 3d by using the color of each pixel as its depth (z) value. A flat image would then be mapped into a 3d space in which each pixel of the image is a single point. Looking at that space "head-on" with a simple projection would reproduce the original image, but rotating the point of view with a rotation transformation would make all the dark pixels float to one side and all the light ones float to the other. I'm not sure what practical use could come of this, but the transformation seemed like an interesting effect to observe and not too difficult to implement, so I decided to try it out.
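As a rough illustration of the mapping itself, here is a minimal C++ sketch (plain standard library, no openFrameworks) that turns a row-major RGB image into one 3d point per pixel. The post doesn't say exactly how a color becomes a depth, so the sketch assumes the plain average of the three channels. `RGB`, `Point3`, `brightness`, `imageToPoints` and the `depthScale` parameter are hypothetical names chosen for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// One pixel, 8 bits per channel.
struct RGB { std::uint8_t r, g, b; };

// One point in 3d space.
struct Point3 { float x, y, z; };

// Brightness in [0, 255] as the plain average of the channels.
// Assumption: the original post does not specify the color-to-depth rule.
float brightness(const RGB& p) {
    return (p.r + p.g + p.b) / 3.0f;
}

// Map a width x height image (row-major) to one point per pixel:
// x = column, y = row, z = brightness * depthScale.
std::vector<Point3> imageToPoints(const std::vector<RGB>& pixels,
                                  int width, int height, float depthScale) {
    assert(pixels.size() == static_cast<std::size_t>(width) * height);
    std::vector<Point3> points;
    points.reserve(pixels.size());
    for (int row = 0; row < height; ++row) {
        for (int col = 0; col < width; ++col) {
            const RGB& p = pixels[static_cast<std::size_t>(row) * width + col];
            points.push_back({static_cast<float>(col),
                              static_cast<float>(row),
                              brightness(p) * depthScale});
        }
    }
    return points;
}
```

For a 2x2 image, the pixel in column 1 of row 0 becomes the point (1, 0, brightness × depthScale). A weighted luma formula or a single channel would work just as well as the average; the choice only changes which colors end up closest to the viewer.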

Tools

In theory there are many ways to implement this; really any language that supports image processing will do. I wanted good performance and the ability to implement my own matrix transform (as opposed to using a pre-built 3d engine and just telling it where to plot the points), so I chose the openFrameworks platform with Code::Blocks as the IDE. The "advanced graphics example" project served as the starting template for this code (in the end, the only things kept from it were the 'spinPct' variable and the library references in the project file).
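Implementing the matrix transform by hand mostly comes down to a few 3x3 rotation matrices and an orthographic projection. The sketch below is one possible way to do it in plain C++ and is not taken from the original project; `Vec3`, `Vec2`, `Mat3`, `rotationX`, `rotationY`, `multiply`, `apply` and `project` are all hypothetical helpers.

```cpp
#include <array>
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };
struct Vec2 { double x, y; };
using Mat3 = std::array<std::array<double, 3>, 3>;

// Rotation by angle a (radians) about the x axis: tilts the image up/down.
Mat3 rotationX(double a) {
    double c = std::cos(a), s = std::sin(a);
    return {{{1, 0, 0}, {0, c, -s}, {0, s, c}}};
}

// Rotation by angle a (radians) about the y axis: turns the image left/right.
Mat3 rotationY(double a) {
    double c = std::cos(a), s = std::sin(a);
    return {{{c, 0, s}, {0, 1, 0}, {-s, 0, c}}};
}

// Matrix product m * n; applying it equals applying n first, then m.
Mat3 multiply(const Mat3& m, const Mat3& n) {
    Mat3 out{};  // zero-initialized
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            for (int k = 0; k < 3; ++k)
                out[i][j] += m[i][k] * n[k][j];
    return out;
}

// Multiply a column vector by the matrix.
Vec3 apply(const Mat3& m, const Vec3& v) {
    return {m[0][0] * v.x + m[0][1] * v.y + m[0][2] * v.z,
            m[1][0] * v.x + m[1][1] * v.y + m[1][2] * v.z,
            m[2][0] * v.x + m[2][1] * v.y + m[2][2] * v.z};
}

// Orthographic projection onto the screen: simply drop z.
Vec2 project(const Vec3& v) { return {v.x, v.y}; }
```

With both angles at zero the product is the identity matrix, so `project` returns each pixel's original (x, y) and the image looks unchanged head-on. Tilting by 90° about the x axis instead turns each point's depth into (minus) its vertical screen position, so brightness differences show up as height differences.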

Code

The program consists of three files:

Note that to actually compile this you must have openFrameworks installed and configured properly (try compiling their sample code first). Since this was just a small exploration, the file name of the image to analyze is hard-coded in testApp.cpp, so change it there if necessary.

Results

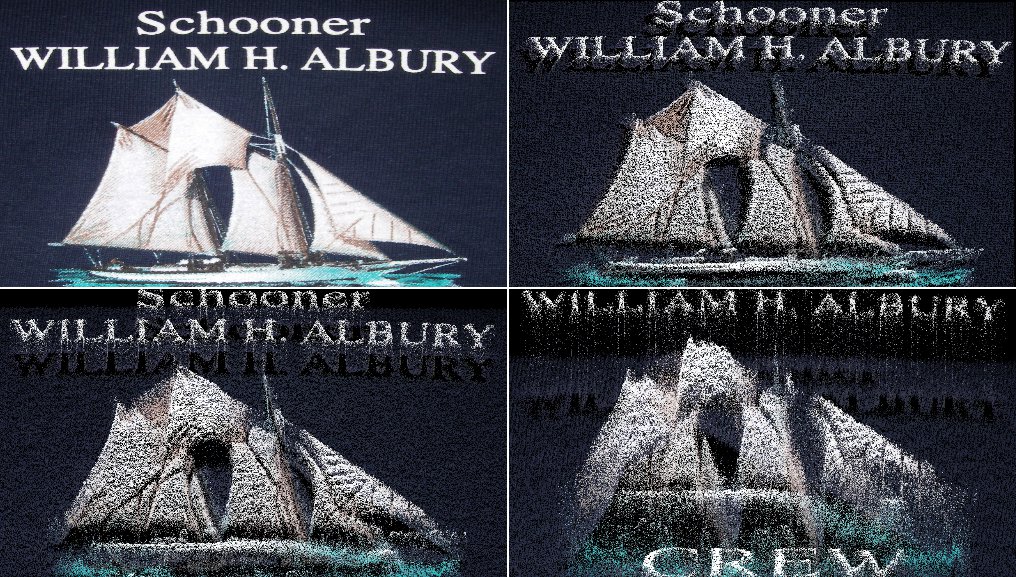

Here is one interesting result that I liked. The image is a photo of the logo on a shirt I have from the Bahamas.

The top-left corner contains the original image. Moving right, the view is rotated slightly up and to the right, and the separation between the white letters and the dark background becomes more readily visible. In the next row, the first image is rotated further up but not to the right, which makes the height difference between light and dark pixels even more evident. The final image is rotated almost perpendicular to the point of view, and the schooner (and, to a lesser extent, the waves below it) sits well above its background. At the edges of the letters, pixels whose colors lie between the background and the foreground are visible; they look as if they are falling from the letters to the shirt surface. Slight differences in the coloring of the background are also evident.

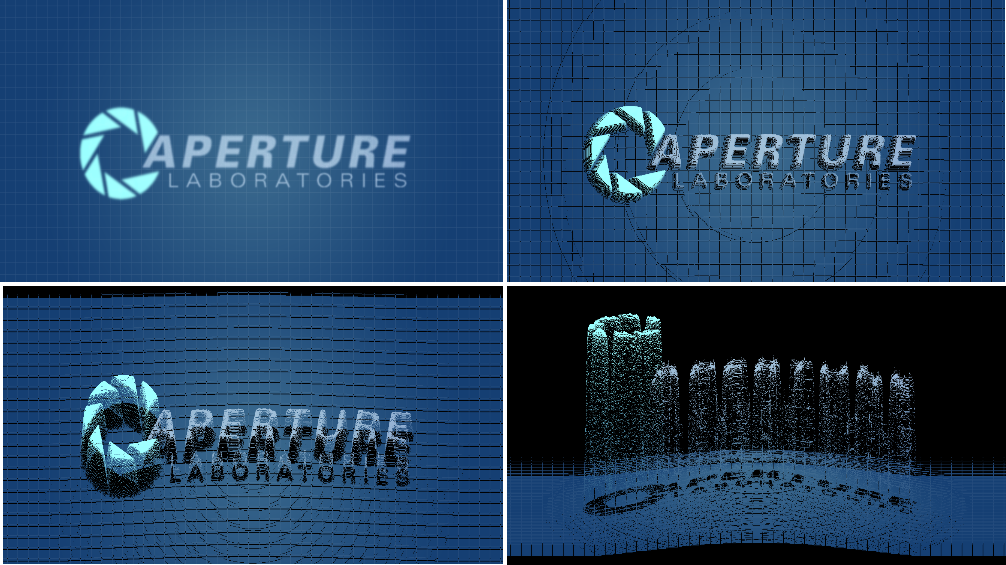

This result suggested that the program could actually be useful for visual image analysis, because it emphasizes differences between pixel colors that would otherwise go unnoticed. It could also serve as a special effect of some sort. Below, a computer-generated PNG image (with much less randomness in its color distribution than the photo) is analyzed in the same way as above.
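One way to see why small color differences become visible: after an orthographic projection, tilting the points by an angle θ about the x axis puts a pixel at vertical screen position y·cos θ - z·sin θ, where z is its brightness times a depth scale. Two pixels in the same row whose brightness differs by Δb therefore end up Δb · depthScale · sin θ apart, even if they look identical head-on. The `screenY` helper below is a hypothetical one-liner for that formula, assuming this particular rotation convention.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper: vertical screen position of a pixel at image row `row`
// with brightness `b` (0-255), after tilting the point cloud by `angle`
// radians about the x axis and projecting orthographically.
// Depth is z = b * depthScale; the projected y is row*cos - z*sin.
double screenY(double row, double b, double depthScale, double angle) {
    return row * std::cos(angle) - b * depthScale * std::sin(angle);
}
```

For example, with a depth scale of 2, brightness values of 100 and 104 share the same screen position head-on, but at θ = 80° they are 8 × sin 80°, or about 7.9 pixels, apart.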

The top-left corner is again the original image. When it is rotated slightly, a circular pattern starts to emerge, along with a clear separation between the text and the background. The circular pattern is even, meaning the color gradient is computer-generated and has not been adjusted. Unlike in the photo, the intermediate pixels between the text and the background are fairly evenly distributed, implying that a linear blur filter was applied. In the final image, a curve is clearly visible on the background, emphasizing a lighter 'dot' in the middle of the image (which is relatively hard to spot in the original). The same curve shape is also visible in the text above it, which means the text is in fact transparent. How's that for visual analysis?
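The reasoning about the blur can be checked on a single row of pixels. A box blur (a moving average) is one common linear blur filter; the sketch below assumes a 1D box blur with clamped edges, uses a hypothetical `boxBlur` helper, and applies it to a hard step from black (0) to a light gray (240).

```cpp
#include <cassert>
#include <vector>

// Hypothetical 1D box blur: each output value is the average of the
// (2 * radius + 1) input values centered on it. Indices past either end
// are clamped, i.e. the border pixel is repeated.
std::vector<double> boxBlur(const std::vector<double>& row, int radius) {
    assert(radius >= 0);
    const int n = static_cast<int>(row.size());
    std::vector<double> out(row.size());
    for (int i = 0; i < n; ++i) {
        double sum = 0;
        for (int k = -radius; k <= radius; ++k) {
            int j = i + k;
            if (j < 0) j = 0;
            if (j > n - 1) j = n - 1;
            sum += row[j];
        }
        out[i] = sum / (2 * radius + 1);
    }
    return out;
}
```

On the row 0, 0, 0, 0, 0, 240, 240, 240, 240, 240 with `radius = 2`, the five-pixel window turns the step into 0, 48, 96, 144, 192, 240 around the edge: the intermediate values are spaced exactly 48 apart. Used as depths, they form an evenly spaced ramp between text and background, whereas the edge pixels in a photo have no reason to be this regular.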